AI Assistants: A Balancing Act Between Data Security and Technological Progress

Simon Krannig, data scientist at ADACOR, shares insights into the transformative potential of open-source AI Assistants.

Open-source AI assistants, when effectively linked with your own business documents, could reshape the way your company operates, and they can do so without jeopardizing your sensitive data.

Impressive strides in language-based AI services such as OpenAI’s ChatGPT, Microsoft Bing Chat, and Google Bard have led to remarkably rapid adoption of AI technology. These advancements have sparked the interest of businesses and generated many ideas for how AI models can be harnessed to enhance operational efficiency and customer experience. However, these opportunities come with a significant challenge: how can businesses make full use of AI models without risking the security of their sensitive data and internal documents by sharing them with external services?

Understanding AI Language Models

AI Language Models, colloquially known as Large Language Models (LLMs), are designed to learn from vast volumes of text. Leading AI services like OpenAI’s ChatGPT or Google Bard train their models on so much data that it amounts to a substantial share of the text publicly available on the Internet. The computing power required to process this much information is considerable: training often runs on hundreds or even thousands of Graphics Processing Units (GPUs) operating continuously for several weeks. This makes these models some of the most resource-intensive in the field of AI. But what does the learning process look like for these models?

When it comes to text-generating LLMs, the underlying principle is elegantly simple. Given an input text, these models are tasked with predicting the most likely subsequent sequence of words. For instance, if the input is “Paris is the capital of …”, the model would, based on its previous training, suggest that a likely continuation is “France” or, less likely, “fashion”. This feature isn’t unfamiliar to most of us, as it mirrors the autocomplete functionality on our smartphones.
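The prediction principle can be illustrated with a deliberately tiny sketch. It is not how a real LLM works internally (those use neural networks trained on far more text); it only counts which word follows which in a made-up three-sentence corpus, and the function names are chosen for illustration.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus):
    """Count, for every word, which words follow it in the training text."""
    followers = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            followers[current_word][next_word] += 1
    return followers

def predict_next(model, prompt):
    """Return candidate next words for the prompt, most likely first."""
    last_word = prompt.lower().split()[-1]
    counts = model.get(last_word, Counter())
    total = sum(counts.values())
    return [(word, count / total) for word, count in counts.most_common()]

# Made-up training text, for illustration only.
toy_corpus = [
    "paris is the capital of france",
    "berlin is the capital of germany",
    "paris is the capital of france and of fashion",
]
model = train_bigram_model(toy_corpus)
predictions = predict_next(model, "Paris is the capital of")
print(predictions)
```

In this toy corpus, “france” follows “of” more often than “fashion” does, so it is ranked as the more likely continuation. A real model conditions on the whole input rather than only the last word.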

With additional rounds of training on data designed to mimic conversations, the model becomes skilled at continuing any user query, as if it were engaged in a natural dialogue. Afterwards, substantial human feedback and fine-tuning ensure that the model provides responses that are not only accurate but also user-friendly.

This unique setup gives LLMs the capability to carry out a wide array of instructions, from offering coding advice to simplifying complex topics, and many more.

Infusing your data into existing models

Despite these innovative capabilities, there is one notable drawback to these models: they can only respond based on the information present in the data used to train them. This limitation excludes two critical sources of information.

- Firstly, it doesn’t account for any recent events, news, discoveries, or discussions that happened after the model’s last training session.

- Secondly, any non-public data such as internal business documents will remain inaccessible to the model. As a result, the model will not be able to answer questions related to your specific business operations.

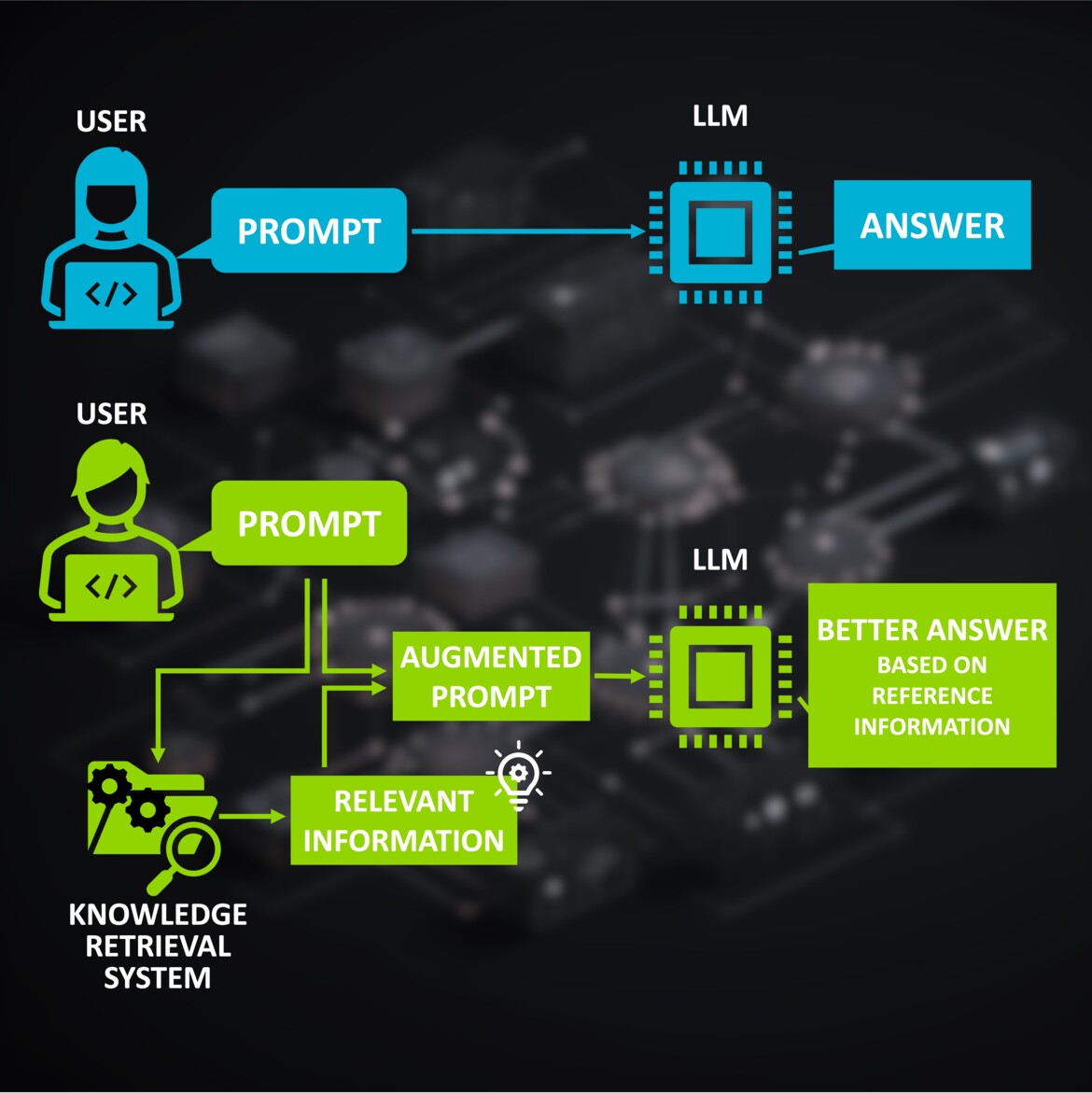

Are we then stuck with LLMs that can only scratch the surface of the information most relevant to us? Fortunately, the answer is “no”. The solution is deceptively simple: When we feed a question to the model, we also include the corresponding reference information. This could be anything from a news article to a private document. If it encompasses the relevant information, the LLM will take it into account and use it to answer our queries.
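As a minimal sketch of this idea, the snippet below places reference text in front of the question before it is sent to a model. The prompt wording, the function name `build_augmented_prompt`, and the HR policy text are all hypothetical; the exact format that works best will depend on the model used.

```python
def build_augmented_prompt(question, reference_texts):
    """Combine reference passages and the user's question into one prompt."""
    context = "\n\n".join(
        f"[Reference {i}]\n{text.strip()}"
        for i, text in enumerate(reference_texts, start=1)
    )
    return (
        "Answer the question using only the reference information below. "
        "If the references do not contain the answer, say so.\n\n"
        f"{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )

# Hypothetical internal document, invented for this example.
prompt = build_augmented_prompt(
    "How many vacation days do new employees get?",
    ["Internal HR policy: new employees receive 28 vacation days per year."],
)
print(prompt)
```

The resulting string is what the LLM receives as input, so the model can answer from the reference even though the policy was never part of its training data.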

This process would require us to know about the relevant reference information beforehand, but it can be made more useful with an automated knowledge retrieval system. Such a system serves as a bridge between the user’s question and the model’s response. It functions like a search engine for our custom documents, enabling us to find PDFs, protocols, source code, and any other text-based documents. The system then ranks these documents based on how relevant they are to the user’s query, and augments the original prompt with the most relevant pieces.
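The retrieve-then-augment flow might look roughly like the sketch below. It assumes a crude relevance score (the number of words a document shares with the question) purely for illustration; production systems typically use more capable ranking. The file names and contents are invented.

```python
import re

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def rank_documents(question, documents):
    """Order (name, text) pairs by how many question words each text shares."""
    question_words = tokenize(question)
    scored = [
        (len(question_words & tokenize(text)), name, text)
        for name, text in documents.items()
    ]
    # Highest overlap first; documents sharing no words are dropped.
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(name, text) for score, name, text in scored if score > 0]

def retrieve_and_augment(question, documents, top_k=2):
    """Prepend the top_k most relevant documents to the user's question."""
    best = rank_documents(question, documents)[:top_k]
    context = "\n".join(f"- {name}: {text}" for name, text in best)
    return f"Reference information:\n{context}\n\nQuestion: {question}"

# Invented example documents.
documents = {
    "backup_protocol.txt": "Database backups run every night at 02:00 and are kept for 30 days.",
    "onboarding.pdf": "New employees receive a laptop and access badge on their first day.",
    "deploy.py": "Script that deploys the web application to the staging cluster.",
}
question = "How long are database backups kept?"
ranked = rank_documents(question, documents)
prompt = retrieve_and_augment(question, documents, top_k=1)
print(prompt)
```

Only the backup protocol shares words with the question here, so it is the only document added to the prompt; the user never has to know in advance which file holds the answer.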

Augmenting LLM prompts with an automated knowledge retrieval system

Knowledge retrieval systems come in many types, yet when it comes to enhancing the prompts of conversational LLMs, semantic search engines take center stage. They rely on “Embedding LLMs”, which are designed specifically to encode the semantics of a given text numerically, as a vector. The vector for every piece of text in your knowledge base is then stored in a vector database. When a user asks a question, the question is encoded in the same way, and the stored texts whose vectors lie closest to it are returned as the most relevant references. This interplay between Embedding LLMs and semantic search engines is what allows a conversational LLM to draw on your own documents.
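To make the data flow concrete, here is a small sketch of a semantic search loop. Two parts are stand-ins and should be read as assumptions: `embed` uses plain word counts instead of a real embedding model (so it matches shared words, not meaning), and `VectorStore` is a simple in-memory list rather than an actual vector database. The example texts are invented.

```python
import math
import re
from collections import Counter

def embed(text):
    """Stand-in for an embedding LLM: a sparse word-count vector.

    A real embedding model returns a dense vector that captures meaning;
    this toy version only illustrates how vectors are stored and compared.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine_similarity(a, b):
    """Cosine of the angle between two sparse vectors (1.0 = same direction)."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

class VectorStore:
    """Minimal in-memory stand-in for a vector database."""

    def __init__(self):
        self.entries = []  # list of (vector, original text) pairs

    def add(self, text):
        self.entries.append((embed(text), text))

    def search(self, query, top_k=1):
        """Return the top_k stored texts most similar to the query."""
        query_vector = embed(query)
        ranked = sorted(
            self.entries,
            key=lambda entry: cosine_similarity(query_vector, entry[0]),
            reverse=True,
        )
        return [text for _, text in ranked[:top_k]]

store = VectorStore()
store.add("Invoices are archived for ten years in the finance share.")
store.add("The VPN client must be updated every quarter.")
results = store.search("Where are invoices archived?")
print(results)
```

Swapping `embed` for a real embedding model would leave the rest of the loop unchanged, which is why the embedding step and the vector store can be treated as separate building blocks.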

Open source versus AI-as-a-Service

While the idea of integrating such a custom search engine with an LLM is attractive, it does bring up a considerable caveat. Each time we use this setup, we are potentially transmitting a large amount of private data to the entity that owns and operates the model or the AI service. Keep in mind that every single prompt is now packed with raw data from your knowledge retrieval system. Therefore, any hesitations we might have had about using major AI services like OpenAI’s ChatGPT or Google Bard due to privacy concerns should now be even more acute.

The solution to this predicament may initially seem infeasible. We could consider hosting our very own LLM, thus taking ownership and control of the model to ensure that data security measures are maintained at all times and that private data never leaves our systems. However, given that training such a model requires a tremendous amount of raw data, tons of human feedback, and incredible amounts of computing power, the feasibility of this approach may appear questionable at first.

The answer to this dilemma, however, resides in the rapidly expanding open-source community, which is devoted to the development and enhancement of ready-to-use LLMs. Open source brings with it the promise of democratized access, allowing individuals and organizations to harness the power of AI while maintaining control over their data.

Navigating the open-source ecosystem

Open-source models tend to be smaller than their proprietary counterparts. For context, GPT-3 already has 175 billion model parameters, and Google Bard and GPT-4 are reported to scale this up by a factor of 3 to 6. Most open-source models, in contrast, fall within the range of 3 billion to 40 billion parameters. This size difference does not necessarily limit their usefulness or potential, but it does tremendously reduce the required compute resources.

The open-source landscape is divided into two main camps. The first group focuses on the meticulous development of foundational LLMs such as EleutherAI’s Pythia, MosaicML’s MPT, and the Falcon model from the Technology Innovation Institute. These are typically text-continuation models that are yet to be fine-tuned on conversational data, but their training consumes the largest amount of computational resources in the entire training lifecycle of LLMs.

The second group then takes these foundation models and fine-tunes them for various tasks, including the described conversational text-generation style. While not all of these models are licensed for free commercial use, a considerable number of them indeed are.

This results in a multitude of openly available, ready-to-use models, optimized for various use cases. They can be hosted by any individual or business, securely integrated with knowledge retrieval systems, and are continually developed and improved at an impressively fast pace.

The compute requirements for most open-source models are remarkably modest – a cloud virtual machine equipped with a single high-end GPU is generally sufficient. This low entry barrier makes it feasible for even small businesses and startups to tap into the potential of modern AI while offering an alternative path for those who prefer not to share their data with major AI service providers.
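A rough back-of-the-envelope calculation shows why model size matters so much here. The sketch below estimates only the memory needed to hold the model weights, assuming 2 bytes per parameter (16-bit precision) or 1 byte (8-bit); it ignores the additional memory needed while the model is running, so real requirements are somewhat higher.

```python
def weight_memory_gb(parameters_in_billions, bytes_per_parameter=2):
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes).

    One billion parameters at one byte each occupy one gigabyte, so the
    estimate is simply billions of parameters times bytes per parameter.
    """
    return parameters_in_billions * bytes_per_parameter

# Model sizes mentioned in the article, in billions of parameters.
for size in (3, 40, 175):
    print(
        f"{size:>4}B parameters: ~{weight_memory_gb(size)} GB at 16-bit, "
        f"~{weight_memory_gb(size, 1)} GB at 8-bit"
    )
```

By this estimate, a 3-billion-parameter model needs about 6 GB for its weights at 16-bit precision, while a 175-billion-parameter model needs about 350 GB, far beyond the tens of gigabytes a single high-end GPU typically offers.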

Simon Krannig is a Data Scientist at Adacor Hosting. His background is in Applied Mathematics and Machine Learning, with a strong focus on ML- and AI-Ops and the related Data Engineering tasks. He is responsible for designing cloud-based end-to-end Data and AI solutions for customers while communicating current trends in the field of Data and AI to his colleagues.

Please note: The opinions expressed in Industry Insights published by dotmagazine are the author’s or interview partner’s own and do not necessarily reflect the view of the publisher, eco – Association of the Internet Industry.