Artificial Intelligence and IT Security - More Security, More Threats

Prof. Norbert Pohlmann from eco investigates the dual role of AI as both a powerful security asset and a potential attack vector. He highlights the importance of securing AI systems while underscoring the need for human oversight in critical areas to ensure safety and trust.

© Peach_iStock| istockphoto.com

IT security and Artificial Intelligence (AI) are two interconnected technologies that profoundly influence each other. This article begins by outlining key classifications, definitions, and principles of AI. We then explore how AI can enhance IT security, while also addressing how attackers can leverage AI to compromise systems. Lastly, the article examines the critical issue of securing AI itself, detailing the measures needed to protect AI systems from manipulation by attackers.

Classification of Artificial Intelligence

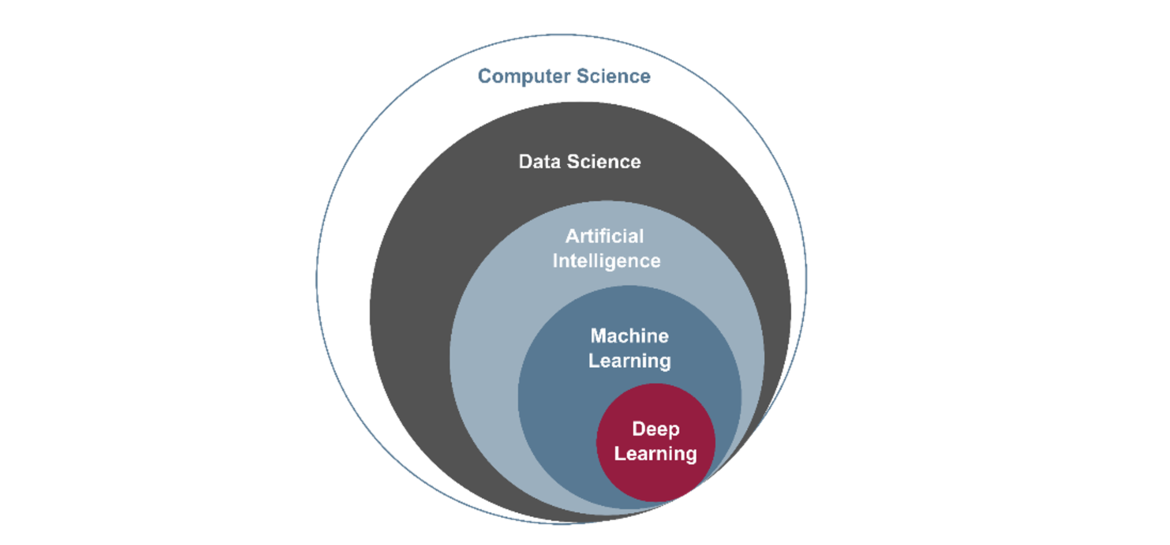

“Data Science” is a field of computer science that deals with extracting knowledge from the information in data (see Figure 1). As the volume of data grows, more knowledge can be derived from it.

Fig. 1: Classification of Artificial Intelligence

A distinction is made between weak and strong AI. Strong AI, also known as Artificial General Intelligence (AGI), refers to the hypothetical point at which AI systems surpass human-level intelligence. At this “singularity,” AI could rapidly improve itself in unpredictable ways. Humanity could lose control over the AI, making the future unpredictable and potentially leading to negative consequences. Our common task must therefore be to ensure that AI systems that surpass human intelligence act in harmony with human values and goals.

In contrast, weak AI, exemplified by Machine Learning (ML), is currently successful thanks to innovations like Deep Learning and Large Language Models (LLMs). In machine learning, the term “stochastic parrot” is a metaphor for the theory that a large language model can generate plausible text but does not understand its meaning. A large language model therefore cannot reliably identify its own errors, and humans must be able to verify the results themselves when using one. LLMs are the basis for generative AI.

Generative AI (GenAI), such as ChatGPT, creates various types of content and has revolutionized digitalization.

Paradigm: Garbage In, Garbage Out (GIGO)

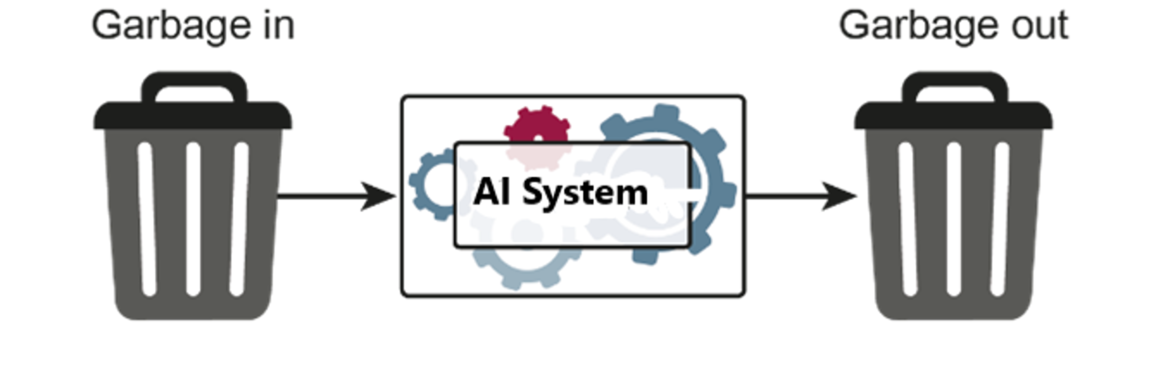

The quality of data is paramount in AI. The GIGO paradigm (see Figure 2) emphasizes that the quality of AI results depends directly on the quality of the input data: poor data leads to poor outcomes, while high-quality data is essential for trustworthy AI results.

Fig. 2: Garbage in, Garbage out

Several factors influence the quality of input data:

- Completeness: AI systems must have access to full, relevant datasets to make accurate decisions. In cybersecurity, for instance, a system should have data on various types of cyberattacks to detect them effectively.

- Representativeness: The data must reflect real-world scenarios, ensuring that AI can generalize across different contexts.

- Traceability: Knowing the source and transformation process of the data helps ensure its reliability.

- Timeliness: AI needs up-to-date data to predict current threats. Old data can lead to missed vulnerabilities.

- Correctness: Accurate data labels and categories are crucial to prevent misleading results, especially in sensitive applications like malware detection.

Overall, ensuring high-quality data is essential to leveraging AI effectively in IT security.
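Some of the factors above can be checked mechanically before a dataset is used for training. The following is a minimal sketch, assuming a hypothetical record schema (`Sample`) and hypothetical thresholds that are not part of the original article. It covers completeness, traceability, timeliness, and correctness; representativeness usually needs domain knowledge and is not covered here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Sample:
    """One labeled training record (hypothetical schema for illustration)."""
    attack_type: str        # e.g. "phishing", "ddos", "benign"
    source: str             # where the record came from; "" if unknown
    collected_at: datetime  # when the record was captured
    label: str              # expected to be "malicious" or "benign"


ALLOWED_LABELS = {"malicious", "benign"}


def quality_report(samples, required_types, max_age_days, now):
    """Return one simple finding per quality factor."""
    seen_types = {s.attack_type for s in samples}
    cutoff = now - timedelta(days=max_age_days)
    return {
        # Completeness: required attack types without a single example
        "missing_types": sorted(set(required_types) - seen_types),
        # Traceability: records without a documented source
        "untraceable": sum(1 for s in samples if not s.source),
        # Timeliness: records older than the cutoff date
        "stale": sum(1 for s in samples if s.collected_at < cutoff),
        # Correctness: labels outside the allowed set (e.g. typos)
        "bad_labels": sum(1 for s in samples if s.label not in ALLOWED_LABELS),
    }
```

A report with non-empty `missing_types` or non-zero counts would be a signal to fix the dataset first rather than to train on it.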

Handling AI results

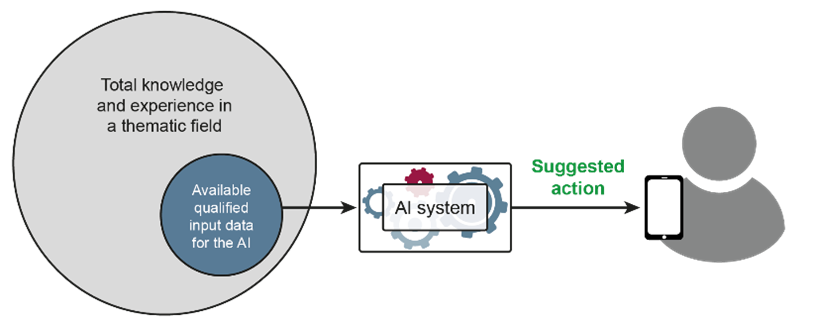

Under the principle of “Keep the human in the loop,” AI results are understood as recommendations for action that the user can accept or reject (see Figure 3). AI is a powerful tool, but it should not operate autonomously in all situations. Keeping the human in the loop is critical in IT security to avoid potential misjudgments by AI. While AI can detect patterns or anomalies in vast data sets very quickly, human expertise is needed to validate its findings. False positives, such as unusual but harmless network activity, require human judgment for accurate interpretation.

Fig. 3: Keep the human in the loop

However, in time-sensitive contexts, autonomous decision-making by AI can be valuable. AI systems can automatically adjust firewalls or isolate compromised systems during an active cyberattack. Although this reduces response time, human oversight is still necessary to ensure that these automatic actions don’t lead to service disruptions or unintended consequences.
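The split between recommendation and autonomous action can be expressed as a small routing rule. The sketch below is illustrative only: the `Finding` type, the mode names, and the 0.95 threshold are assumptions, not something the article prescribes.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    """An AI result to be handled (hypothetical type for illustration)."""
    description: str
    confidence: float    # model confidence in the range [0, 1]
    time_critical: bool  # e.g. an attack that is actively spreading


def decide(finding, auto_threshold=0.95):
    """Map an AI finding to a handling mode.

    "auto_then_review": act immediately, then queue the action for human review
    "recommend":        present as a suggestion; a human makes the decision
    """
    if finding.time_critical and finding.confidence >= auto_threshold:
        return "auto_then_review"
    return "recommend"
```

Even the autonomous path ends in a human review here, so that an automatic firewall change or system isolation can be checked for unintended side effects.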

AI for IT Security

The use of AI for IT security creates significant added value for the protection of companies and organizations. Two application areas are presented below as examples:

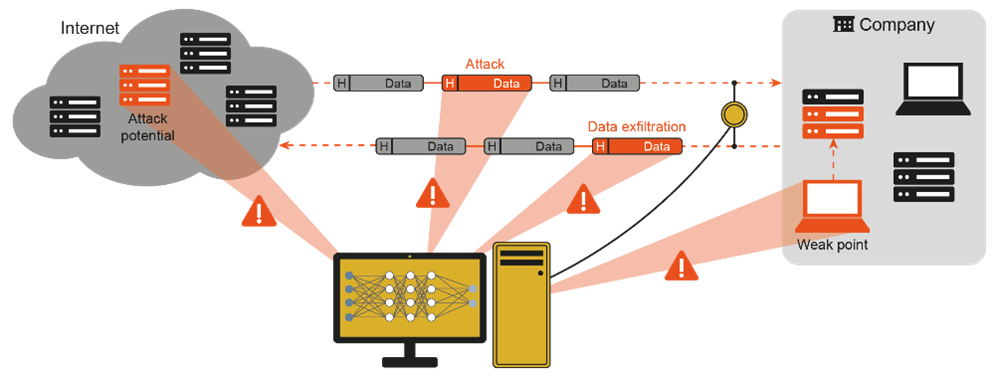

1. Increasing the detection rate of attacks: Adaptive AI models, fed with security-relevant data gathered from networks and IT systems, can identify threats early across devices, servers, IoT, and cloud applications (see Figure 4).

Fig. 4: Detection of attacks

2. Supporting and relieving IT security experts, who are in short supply: When detecting IT security incidents, AI can analyze and prioritize large volumes of security-relevant data, helping IT security experts focus on the most critical threats. Furthermore, with (partial) autonomy in its responses, AI can automatically adjust firewall and email rules during an attack, minimizing the attack surface and keeping essential business processes running.
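Both application areas can be illustrated with a deliberately simple stand-in for an AI model: a z-score against a historical baseline to detect anomalies, followed by a ranking so that experts see the most suspicious hosts first. This is a sketch under stated assumptions; the function names, the single metric, and the threshold of 3.0 are hypothetical, and real systems use far richer models and data.

```python
from statistics import mean, stdev


def anomaly_scores(baseline, observed):
    """Score each host by its deviation from the historical baseline.

    baseline: list of historical values (e.g. logins per hour)
    observed: dict mapping host name -> current value
    Returns a dict mapping host name -> absolute z-score.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        raise ValueError("baseline has no variance; z-score is undefined")
    return {host: abs(value - mu) / sigma for host, value in observed.items()}


def prioritize(scores, threshold=3.0):
    """Return (host, score) pairs at or above the threshold, most anomalous first."""
    flagged = [(host, score) for host, score in scores.items() if score >= threshold]
    return sorted(flagged, key=lambda item: item[1], reverse=True)
```

With a baseline of roughly 10 logins per hour, a host reporting 40 would rank above one reporting 18, and a host reporting 11 would not be flagged at all.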

Attackers use AI

Just as AI strengthens defenses, it also provides cybercriminals with more advanced tools for launching sophisticated attacks. These include:

- Identifying attack vectors: AI is used to identify vulnerabilities in IT systems, revealing potential attack opportunities for hackers to exploit.

- Overcoming IT security measures: AI helps attackers analyze and bypass intrusion detection systems by learning their thresholds or patterns, so that attacks go undetected.

- Automated attacks: AI-driven social bots create fake accounts, spread fake news, and manipulate people through targeted content. This includes generating high-quality images and profiles in order to infiltrate suitable filter bubbles or echo chambers.

- Social engineering: AI, especially large language models (LLMs), can imitate writing styles, making social engineering attacks like spear-phishing more convincing, with potential use in deepfake audio or video attacks.

- Accelerated development of attacks: LLMs like ChatGPT accelerate the development of cyberattacks, including polymorphic malware, which changes code to evade traditional signature-based detection methods.

- Confidential information as input for a central service: Entering confidential information into AI systems poses a security risk, as the data could be used for further training, and it also creates a new attack surface that could be exploited.

IT security for AI

AI systems themselves are vulnerable to attacks and securing them is a growing concern. Key threats include:

- Data integrity: AI relies on massive datasets for training and operation. Compromised data can lead to incorrect or harmful AI decisions. Techniques like cryptographic checksums can verify data integrity.

- Data confidentiality: Sensitive data, often used to train AI systems, must be encrypted and protected from unauthorized access.

- Data protection: If personal data is involved, this must also be protected using the latest technological standards.

- Availability: As AI systems are increasingly used in digitalization, availability is becoming more and more important. Therefore, data must be stored redundantly to ensure availability.
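The cryptographic checksums mentioned under data integrity can be sketched with Python's standard library. The helper names below are hypothetical. Note the assumption that the reference digest is stored somewhere an attacker cannot modify; otherwise a plain hash only detects accidental corruption.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def verify(data: bytes, expected_hex: str) -> bool:
    """Check the data against a previously recorded digest."""
    # compare_digest performs a constant-time comparison
    return hmac.compare_digest(sha256_hex(data), expected_hex)


# Record the digest when the training data is approved ...
training_data = b"label,feature\n1,0.3\n"
recorded = sha256_hex(training_data)

# ... and verify it again before every training run.
tampered = training_data + b"0,0.9\n"
print(verify(training_data, recorded))  # unchanged data passes
print(verify(tampered, recorded))       # modified data fails
```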

Types of attacks on AI

There are several types of attacks on AI systems:

- Manipulating real data (evasion attack): An evasion attack generates adversarial examples, that is, inputs that look normal to humans but cause AI models to make incorrect predictions. These attacks exploit model weaknesses by testing various attack vectors and can be aided by model extraction to understand the target system. Because adversarial examples are transferable, they often also work against other models trained for the same task.

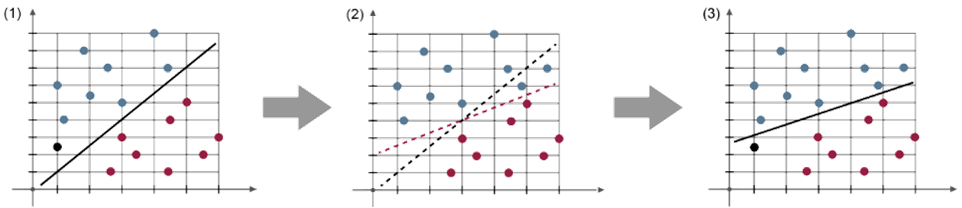

- Poisoning attacks: In a poisoning attack, an attacker inserts malicious samples into the training data to influence the decisions of the affected AI system. For example, an AI system trained with poisoned data might misclassify legitimate emails as spam. The attack requires access to the training data, either directly or indirectly (e.g. via a feedback loop). The attacker’s goal is to modify the AI model so that it makes false decisions in the attacker’s favor (see Figure 5).
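To make the evasion attack from the list above concrete, here is a toy example against a linear classifier. It is a sketch under strong assumptions: the weights, the input, and the perturbation budget `epsilon` are invented, and the attacker is assumed to know the weights (for example after model extraction). Real attacks target far more complex models.

```python
def score(weights, bias, x):
    """Linear score; the sample counts as malicious when the score is > 0."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def evade(weights, x, epsilon):
    """Shift each feature by at most epsilon in the direction that lowers the score."""
    def sign(v):
        return (v > 0) - (v < 0)
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


weights, bias = [2.0, -1.0, 0.5], -1.0   # hypothetical trained model
original = [0.8, 0.2, 0.4]               # correctly detected as malicious
adversarial = evade(weights, original, epsilon=0.2)

print(score(weights, bias, original) > 0)     # detected
print(score(weights, bias, adversarial) > 0)  # no longer detected
```

Each feature changes by no more than 0.2, yet the classification flips. This is the core idea behind adversarial examples: small, targeted changes that exploit how the model weighs its inputs.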

Fig. 5: Example of a poisoning attack: (1) normal classification of a new input, (2) manipulation of the training data, (3) false classification caused by the attacker
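The three steps of the poisoning attack can be reproduced with a toy nearest-centroid classifier on a single feature. This is a minimal sketch: the classifier, the feature (for instance, the number of links in an email), and all values are assumptions chosen for illustration.

```python
from statistics import mean


def train(samples):
    """samples: list of (feature_value, label). Returns {label: centroid}."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def classify(model, value):
    """Pick the label whose centroid is closest to the value."""
    return min(model, key=lambda label: abs(model[label] - value))


clean = [(1, "benign"), (2, "benign"), (3, "benign"),
         (8, "spam"), (9, "spam"), (10, "spam")]

# (1) Normal classification of a new input
print(classify(train(clean), 6))

# (2) The attacker injects spam-like samples labeled as benign
poisoned = clean + [(7, "benign"), (8, "benign"), (9, "benign")]

# (3) The same input is now classified in the attacker's favor
print(classify(train(poisoned), 6))
```

The injected samples pull the “benign” centroid from 2 to 5, so the input 6 moves from the spam side of the decision boundary to the benign side.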

Ultimately, the use of AI is based on trust

Given that the use of AI introduces new vulnerabilities and enables new attack possibilities on governments and companies, it seems logical to defend against criminal attacks at the same level, that is, by using AI to protect IT systems and infrastructures. While AI appears to be an effective means of defense in the context of IT security, it is necessary, as with any use of AI, to discuss the extent to which its implementation is appropriate. [1]

To understand why this discourse is necessary and which research questions need to be addressed in this context, two scenarios from the field of IT protection are provided as examples:

1) Privacy vs. the common good: the problem of data protection

AI’s effectiveness in detecting attacks depends on access to security-relevant data, including personal information, and using such data may violate individual privacy rights under the GDPR. This creates an ethical dilemma between protecting privacy and ensuring societal safety. A potential solution could involve defining specific criteria for when individual rights may be subordinated to the common good.

2) Strike back: the problem of incomplete data

The construct of the “strike back” is based on the hypothesis that an attack can be ended by a counterattack. AI can calculate the strike back and carry it out automatically.

However, this raises ethical concerns regarding AI’s ability to access complete data for balanced decision-making. Automated counterattacks carry the risk of causing unintended harm, such as disabling critical infrastructure like hospitals, highlighting the urgent need for research into trustworthy AI systems and the importance of maintaining human oversight in critical applications.

Since it is uncertain whether AI systems can ever be a perfect solution, and given the complexity and rapid development of the field, it is appropriate to always include a human as a controlling factor in critical applications, following the principle of “Keep the human in the loop.”

Summary and outlook

Artificial intelligence is a very important technology in the field of IT security. Detecting attacks, threats, vulnerabilities, and fake news, as well as supporting and relieving IT security experts, plays a crucial role, as do secure software development and other areas.

It is clear that hackers attack data, algorithms, and models in order to manipulate results. For this reason, the protection of AI systems is of great importance.

Attackers use AI very professionally and successfully for their attacks. Defenders should therefore now use AI much more intensively and extensively in order to defend themselves better.

[1] U. Coester, N. Pohlmann: “Ethics and Artificial Intelligence – Who makes the rules for AI?”, IT & Production – Journal for Successful Produktion, TeDo Verlag, 2019

Norbert Pohlmann is a Professor of Computer Science in the field of cybersecurity and is Managing Director of the Institute for Internet Security - if(is) at the Westphalian University of Applied Sciences in Gelsenkirchen, Germany. He is also Chair of the Board of the German IT Security Association TeleTrusT, and Board Member for IT Security at eco – Association of the Internet Industry.