Redefining Data Centers for the Edge

How is data center design and redundancy evolving for edge environments? Independent consultant Gerd Simon looks at bringing data to life at the edge.

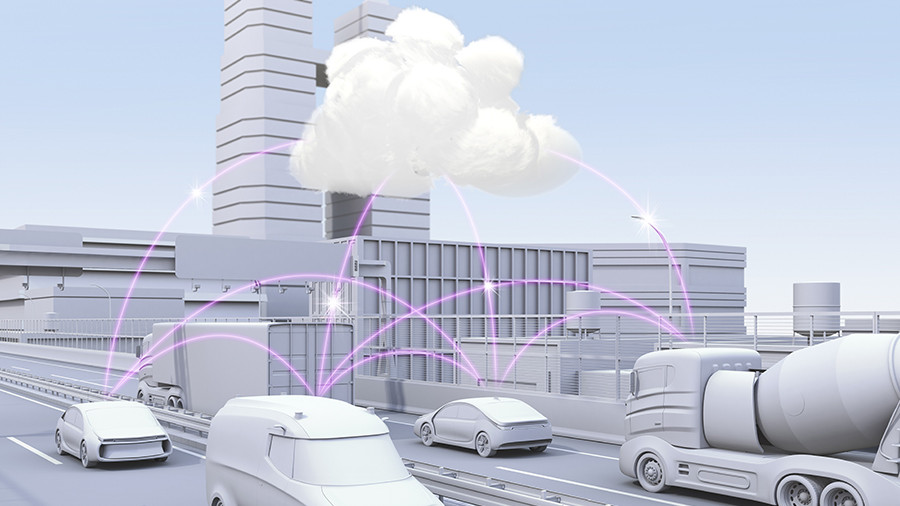

© metamorworks | istockphoto.com

The move to the edge of the Internet

The trend we were already seeing five years ago, when we said “media is moving to the edge”, has now advanced to the point where “everything is moving to an edge”. But what does an edge look like? An edge can be anything that is close to the use case and close to the users, whether those users are humans or machines.

An edge can be a regional data center or even the local data center in a city or region; in the automotive industry, the edge is the IT in the car. I would say that the name data center captures the essence of what’s involved rather well: it’s not the center that chooses the data; it’s the data that chooses the center where it wants to be processed. It’s not the center that defines the workloads; it is the data that defines where it wants to be computed. This is often forgotten.

The differentiating factors of edge data centers

What is an edge data center? To all intents and purposes, an edge data center may look and feel like a standard data center. The difference is that an edge data center is built in an application-centric and use case-centric environment: it is a usable digital infrastructure platform. These data centers can range in size from really, really small, such as micro data centers, up to several megawatts. Why is this such a broad range? Because the user is at the center of this development, and this is a big shift in the mindset of those who developed data centers in the past.

In the past, the setup was more provider-centric: data centers were purpose-built, and often the bigger the better. There is still substantial room for these data centers in the future. But data gravity, driven not least by Internet Exchange developments all around the globe, including DE-CIX, has shown that the fabric of the Internet is changing. In its current form and structure, the Internet does not work for low-latency requirements, because it was never built for them. And the data deluge is here. As a result, edge data centers are beginning to mushroom everywhere.

In terms of building requirements, the paradigm has shifted away from the traditional quality characteristics embodied in a Tier 3 or Tier 4 environment. Climbing the tier ladder is no longer a differentiator. Instead, the edge data center is a redundant infrastructure that supports users in the way they want their data to live, because future developments will be application-centric and use case-centric.

The Uptime Institute’s Tier 3 and Tier 4 guidelines simply calculate the residual risk you carry in terms of design and operation. The truth is that, regardless of what we build in terms of physical redundancy, the main risks in data center operation remain the following:

- UPS (uninterruptible power supply) failures cause the most trouble globally.

- Next comes human error, the second-largest source of trouble.

- Then there are network problems, that is, connectivity outages.

These are the three main areas, accounting for about 75 percent of all risk exposure.

Data and software-redundant infrastructures – the new paradigm

Edge data centers deal with that risk exposure on the basis of lessons learnt from hyperscale developments. Rather than building redundant power supplies, you build software-redundant systems. The software is processed in one block and, because data travels, the same processing runs at another edge. This makes you data- and software-redundant. If one software stack fails, it is shut down, but the data and the software carry on processing somewhere else. It’s distributed, not siloed.
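To make the idea of software redundancy more concrete, here is a minimal sketch in Python, assuming each edge site runs a replica of the same software stack. The `EdgeSite` class, the `process_redundantly` function and the site names are hypothetical, purely for illustration: a failed stack is not repaired in place, the same record is simply processed at the next healthy edge.

```python
from dataclasses import dataclass


@dataclass
class EdgeSite:
    """Hypothetical edge site running a replica of the same software stack."""
    name: str
    healthy: bool = True

    def process(self, record: str) -> str:
        # Stand-in for real processing; raises if the local stack is down.
        if not self.healthy:
            raise RuntimeError(f"{self.name}: software stack down")
        return f"{record} processed at {self.name}"


def process_redundantly(record: str, sites: list) -> str:
    """Try each replica in turn; a failed site is skipped, not fixed in place."""
    errors = []
    for site in sites:
        try:
            return site.process(record)
        except RuntimeError as exc:
            errors.append(str(exc))  # record the failure, move on to the next edge
    raise RuntimeError("all edge replicas failed: " + "; ".join(errors))


# Usage: edge-a has failed, so processing carries on at edge-b.
sites = [EdgeSite("edge-a", healthy=False), EdgeSite("edge-b")]
print(process_redundantly("sensor-42", sites))  # sensor-42 processed at edge-b
```

The redundancy here lives in the software layer rather than in the building: availability comes from having more than one place where the same workload can run.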

This is a big challenge for those now investing in data centers, because investors have so far understood only what it means to invest in a single data center. Now they need to invest in a “bundle” of distributed data centers.

Let’s look at a few examples of edge arrays of different sizes:

- If I want to be connected to the Deutsche Börse (the German stock exchange), I have fewer than 10 data centers to choose from as proximity partners. That’s a small example: that is the Deutsche Börse’s edge. It’s only available in Frankfurt; there is no proximity partner elsewhere.

- If you take the edge of the Internet Exchange DE-CIX in Frankfurt, it comprises more than 32 data centers all around metropolitan Frankfurt and beyond. This means that the connected networks can position themselves geographically right where they need to be in the Rhine/Main metropolis. This is the technical edge of the DE-CIX interconnection platform for the Frankfurt location.

- If we consider autonomous driving, we need infrastructure along the highway to communicate with the cars. Several experts have calculated that we might need a small data center roughly every kilometer, because we have to keep track of the cars speeding along the highway and keep the communication flow consistent along the way.

- Even the car itself is a data center, a mobile data center, because you never want to hand the trust factor over to an outside infrastructure. You want to keep it in your car, self-protected.
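A quick back-of-the-envelope calculation shows why roadside spacing of around one kilometer means constant handovers. The sketch below assumes that each roadside site serves exactly the one-kilometer segment up to the next site; the function names and the example speeds are hypothetical, chosen only for illustration.

```python
def handover_interval_s(speed_kmh: float, spacing_km: float = 1.0) -> float:
    """Seconds a car spends in one site's segment before it is handed over."""
    # time = distance / speed, converted from hours to seconds
    return spacing_km * 3600.0 / speed_kmh


def serving_site(position_km: float, spacing_km: float = 1.0) -> int:
    """Index of the roadside site covering a car at the given highway position."""
    return int(position_km // spacing_km)


print(round(handover_interval_s(130), 1))  # 27.7  (seconds at 130 km/h)
print(handover_interval_s(100))            # 36.0  (seconds at 100 km/h)
print(serving_site(12.4))                  # 12
```

Under these assumptions, a car at motorway speed moves to a new roadside data center roughly every half minute, so its session state has to follow it from site to site without interruption.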

Latency awareness and latency sensitivity

When we talk about digital transformation to the next layer, it means that we don’t care so much where our data will be: the data will care where we are.

Today, we talk about cloud-native developments: cloud-native protocols, cloud-native platforms, cloud-native applications, and the list goes on. Looking at cloud native, we see that the various protocols and platforms are also highly distributed. However, they are neither latency-sensitive nor geographically aware or facility-aware.

When we talk about edge, we will see software developers creating edge-native applications in particular. Why is that? Because the user of the data center is not a human. The user of a data center is data. And data is generated by code, so coding matters, software platforms matter, applications matter. But the difference from all the developments we are seeing today is that real latency awareness and latency sensitivity are what matter.
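As an illustration of what latency awareness can mean in an edge-native application, the following minimal sketch picks the lowest-latency site that still meets a latency budget, and refuses to place the workload if none does. The site names, the budget parameter and the round-trip values are hypothetical, made-up figures, not measurements.

```python
from typing import Dict, Optional


def choose_edge(latencies_ms: Dict[str, float], budget_ms: float) -> Optional[str]:
    """Return the lowest-latency site within the budget, or None if none qualifies."""
    # Keep only the sites that satisfy the application's latency requirement.
    eligible = {site: rtt for site, rtt in latencies_ms.items() if rtt <= budget_ms}
    if not eligible:
        return None
    return min(eligible, key=eligible.get)


# Illustrative round-trip times in milliseconds (made-up values).
measured = {"regional-dc": 18.0, "metro-edge": 4.5, "roadside-unit": 1.2}
print(choose_edge(measured, budget_ms=5.0))  # roadside-unit
print(choose_edge(measured, budget_ms=0.5))  # None
```

The design choice is that placement is driven by the application’s latency requirement rather than by where capacity happens to be available, which is the sense in which the data “chooses” its center.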

Investing in edge data centers

If you look at the Gartner hype cycle, you can clearly see that the edge is still on the rise, on the far left-hand side of the graph. That’s a very early stage. But if we talk about an “enterprise data center” or a “colocation facility”, I think we are already talking about old paradigms.

If you put “edge” in your investment information memorandum, you will be of much more interest to investors than if you say “colocation data center”. There’s money flowing into the data center market, and all the players know that if they have something “edgy”, they can raise funds faster. Right now there is at least twice as much money in the edge data center market as in the other markets. So this is investment hype. Why? Because of the cash flow expectations: whatever is built is application-centric and use case-centric, and as a result, there’s always someone paying for it. A data center is a piece of technology, sure, and it’s infrastructure, but at the end of the day, it’s quite simply a cash flow-producing machine.

Who is going to make money from it? The first movers in the market: those who are customer-centric, application-centric, and use case-centric. The closer you are to your customer base, the better.

That brings me back to where I started: it’s not the center that defines the data, it is the data that looks for the center where it wants to be processed. That’s why we call it a “data center”. In the future, we should move to calling them “application centers” – or, even more specifically, “information centers”. But an information center not only requires data, it also needs context: information is the result of data plus context. The edge is just another step towards the development of such information centers. At the end of the day, machines and humans want information. Data is just the raw material for getting there.

Gerd Simon is a trusted adviser for digital infrastructure investment, an auditor, a senior analyst, and a business leader. For more than 25 years, he has been creating and enabling digital infrastructures, mainly in Europe but also further afield. His focus has been, and still is, on creating go-to-market (GTM) models and implementing business strategies, developing business concepts from scratch through strategic and operational business development. He has been working in TMT markets since the mid-nineties and has a broad network in the Internet, cloud, and data center area.

Please note: The opinions expressed in Industry Insights published by dotmagazine are the author’s own and do not reflect the view of the publisher, eco – Association of the Internet Industry.