The Internet of the Future – What happens behind the scenes?

How will the Internet evolve to keep pace with digital innovation? Christoph Dietzel from DE-CIX offers his vision of the Internet of the future.

© WangAnQi | istockphoto.com

In our digitalized world, the Internet has long since become critical infrastructure. Ever larger data streams flow tirelessly around the globe. For the technologies of the future, like artificial intelligence, virtual reality, and many others, interconnection is the indispensable foundation. Digitalization without networks is not possible. This is why it is so important that we focus on the future of the Internet, including at the level of the basic infrastructure.

Innovation and the data explosion

On the user side, Internet usage has been developing at an accelerating pace over the past few years. This ranges from the massive expansion of online shopping, to the ever wider spread of mobile devices, and on to streaming services – there is hardly any area of daily life that cannot somehow be connected to the Internet. While all this is going on, what is happening in the “backend” of the Internet, behind the scenes in the data center?

At first glance, the developments here might seem rather unspectacular. It may well be difficult for non-specialists to tell the difference between a data center from 1998 and one from today. One difference that is still relatively obvious is the disappearance of copper: fiber optic cabling is used almost exclusively today.

The continued development of the foundation of the Internet can be compared to electricity: You don’t need a “different” type of power depending on whether you’re operating a simple light bulb or a state-of-the-art factory – you just need a lot more of it. In principle, this is the same with Internet traffic: New applications do not produce a new type of traffic, but as a rule, they do produce much more of it. In addition, the newest, most innovative applications are raising the bar: Only very low latencies and absolutely secure transmission will do justice to the coming generations of applications.

Fiber optic to remain the standard

Currently, data centers around the world are taking a “scale-out approach”, which means that existing infrastructure is expanded horizontally to keep up with growing requirements. To optimize the usable space within data centers, a great deal of work is going into integrating the transmission technology more densely, as well as into accelerating automation through robots that can work in extremely confined spaces. Completely new, revolutionary approaches to the entire operation are already being explored in academic research – e.g. data centers that are designed as clean rooms and in which communication no longer takes place via fiber-optic cables, but instead through light pulses that are reflected off a mirrored ceiling.

In 2017, Austrian and Chinese researchers succeeded in establishing a data connection encrypted through quantum cryptography. This method exploits the effect of entanglement at the particle level. A quantum key is generated from photons connected in this way, which is then sent from a satellite to the sender and recipient. Any attempt to intercept this key will be noticed by both parties, thanks to the entanglement effect. The transmission can then be aborted. However, in this method, only the keys are exchanged via satellite, while the data traffic continues to be transferred via traditional cabling.

Nevertheless, we are seeing enormous advances in transmission technology – 400GE ports are already in use at DE-CIX today, for example. The step to the 1000GE mark is also only a question of time – the planning and standardization for this within the IEEE is already in progress. So it is fair to say that the foundations of transmission technology will continue to evolve in the foreseeable future, but will not be displaced by a completely novel, revolutionary technology. Instead, the existing technology will be scaled up even further. To achieve this, we can fall back on the tried and tested means of replication, similar to what already happens with processors. With the end of Moore’s Law, the optimization of the single processor core approached its limits, which meant that multi-core processors started coming onto the market. The same approach is also being taken in network technology – fortunately, wafer-thin glass fibers can be replicated very easily.

Think globally, surf locally?

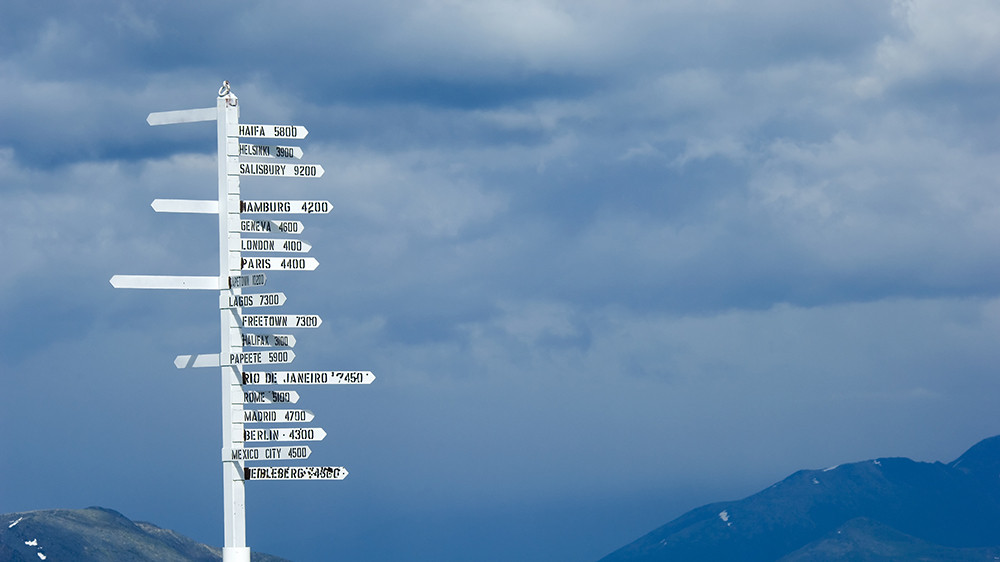

The Internet and globalization belong together. Since the Web revolutionized communication, the world has been shrinking and converging faster and faster. And yet, precisely to support the future development of the Internet, we need to think more locally. Applications like Virtual Reality and 8K content require increasingly large data volumes, but at the same time demand increasingly low latencies. For Virtual Reality applications, the acceptable latency is in the range of 20 milliseconds – to put that in perspective, the blink of an eye takes 150 milliseconds. If we wish to implement such applications on a broad scale, physics forces us to bring the data closer to the user. Since Albert Einstein, we have known that nothing in the universe can move faster than light – meaning data can’t either. Even the enormous speed of around 300,000,000 meters per second would still be too slow to host VR content in the USA and play it in Germany without experiencing judder.
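The arithmetic behind this claim can be sketched in a few lines of Python. This is a back-of-the-envelope estimate, not a measurement: the distance of roughly 6,200 km between Frankfurt and New York, the assumption that light in glass fiber travels at about two thirds of its vacuum speed, and the function names are all illustrative choices that do not come from the article. Routing, switching, and rendering delays are ignored, so real latencies would be higher still.

```python
# Back-of-the-envelope propagation delay; all inputs below are assumptions.
SPEED_OF_LIGHT_M_PER_S = 300_000_000   # rounded value used in the text
FIBER_VELOCITY_FACTOR = 2 / 3          # assumption: light in glass is about 2/3 as fast
VR_LATENCY_BUDGET_MS = 20              # latency range named in the text
FRANKFURT_NEW_YORK_KM = 6_200          # assumption: approximate straight-line distance


def round_trip_ms(distance_km: float, velocity_factor: float = 1.0) -> float:
    """Round-trip propagation delay in milliseconds over a straight path."""
    speed_m_per_s = SPEED_OF_LIGHT_M_PER_S * velocity_factor
    return 2 * distance_km * 1_000 / speed_m_per_s * 1_000


def max_distance_km(budget_ms: float, velocity_factor: float = 1.0) -> float:
    """Largest one-way distance whose round trip still fits into the budget."""
    speed_m_per_s = SPEED_OF_LIGHT_M_PER_S * velocity_factor
    return budget_ms / 1_000 * speed_m_per_s / 2 / 1_000


vacuum_rtt = round_trip_ms(FRANKFURT_NEW_YORK_KM)                        # about 41.3 ms
fiber_rtt = round_trip_ms(FRANKFURT_NEW_YORK_KM, FIBER_VELOCITY_FACTOR)  # about 62 ms
vacuum_reach = max_distance_km(VR_LATENCY_BUDGET_MS)                     # about 3,000 km
fiber_reach = max_distance_km(VR_LATENCY_BUDGET_MS, FIBER_VELOCITY_FACTOR)  # about 2,000 km

print(f"Round trip in vacuum: {vacuum_rtt:.1f} ms, in fiber: {fiber_rtt:.1f} ms")
print(f"Reach within {VR_LATENCY_BUDGET_MS} ms: {vacuum_reach:.0f} km (vacuum), "
      f"{fiber_reach:.0f} km (fiber)")
```

Under these assumptions, the transatlantic round trip alone takes more than twice the 20-millisecond budget, and content would have to sit within roughly 2,000 km of fiber from the user before any processing time is counted.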

In the past 10 years, the structure of the Internet has already changed – providers now operate their own equipment for caching content inside the networks that serve end customers, and in this way they bring their data closer to the consumer. For large-scale VR usage, e.g. in autonomous vehicles, what is happening today in regional telecommunication hubs like London, Amsterdam, and Frankfurt would need to be extended in both coverage and density, including to rural areas.

Fast developments and slow standardization

The Internet of Things (IoT) is without question at the beginning of its golden age. Various forecasts predict that the number of connected devices could exceed the 20 billion mark as early as next year and could reach 50 billion by 2022. Even today, there may already be more connected devices on the planet than there are people.

And for all this, we are basically using just one network protocol: de facto, the entire Internet is based on the Internet Protocol (IP). The IPv4 standard, still dominant today, uses 32-bit addresses, meaning that 2 to the power of 32, or around 4.3 billion, different addresses are possible. This is only enough to allocate an IP address to roughly every second person, not to speak of the many computer systems that need to access the Internet. Of course, this has been known for a while now, and a new 128-bit format has in principle existed since 1998. This new IPv6 standard offers an address space of 2 to the power of 128, or around 340 undecillion (3.4 × 10^38) addresses. With this, there would be no need to worry about running out of IoT addresses. However, measurements at DE-CIX show that currently only around 5 percent of traffic corresponds to the new standard.
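The address-space figures can be checked with plain integer arithmetic. The sketch below assumes a world population of about 7.7 billion for the per-person comparison. That number is an illustrative assumption, not a value from the article.

```python
# Address-space arithmetic for IPv4 and IPv6.
IPV4_BITS = 32
IPV6_BITS = 128
WORLD_POPULATION = 7_700_000_000  # assumption, used only for the per-person ratio

ipv4_addresses = 2 ** IPV4_BITS   # 4,294,967,296 (around 4.3 billion)
ipv6_addresses = 2 ** IPV6_BITS   # around 3.4 x 10^38

ipv4_per_person = ipv4_addresses / WORLD_POPULATION
ipv6_per_person = ipv6_addresses // WORLD_POPULATION

print(f"IPv4 addresses: {ipv4_addresses:,}")
print(f"IPv6 addresses: {ipv6_addresses:.3e}")
print(f"IPv4 addresses per person: {ipv4_per_person:.2f}")
print(f"IPv6 addresses per person: {ipv6_per_person:.3e}")
```

With this population figure, IPv4 yields a little more than half an address per person, which matches the "every second person" estimate, while IPv6 leaves an astronomically large number of addresses for each individual.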

In order to structure the bits that move through the Internet infrastructure, and to interpret them on the other side, the protocols in use need to be standardized. For the Internet, this takes place within the Internet Engineering Task Force (IETF). Change-overs and rollouts in the Internet take so long because the various actors need to find consensus, sometimes in conflict with their financial and political interests. A technological solution to this problem could be the freely programmable network equipment which is starting to appear on the market. In contrast to the current generation, which only allows the standardized protocols to be configured, in the coming generation the processing of data streams and packets can be defined by means of software. This makes it possible in the mid-term for subnetworks to develop their own protocols and use these in closed domains.
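The difference between configuring fixed protocols and defining packet processing in software can be illustrated with a toy model. The following Python sketch is purely conceptual: the `Packet` fields, the `ProgrammablePipeline` class, and the protocol numbers are hypothetical names invented for this illustration and do not correspond to any real device or vendor API.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Packet:
    protocol_id: int  # hypothetical header field identifying the protocol
    payload: bytes


Handler = Callable[[Packet], str]


class ProgrammablePipeline:
    """Toy match-action table: the operator installs the handlers in software."""

    def __init__(self, default: Handler) -> None:
        self._table: Dict[int, Handler] = {}
        self._default = default

    def install(self, protocol_id: int, handler: Handler) -> None:
        """Register processing logic for a protocol, standardized or not."""
        self._table[protocol_id] = handler

    def process(self, packet: Packet) -> str:
        """Look up the handler for the packet and apply it."""
        handler = self._table.get(packet.protocol_id, self._default)
        return handler(packet)


def forward_ip(packet: Packet) -> str:
    return "forward via standard IP lookup"


def handle_custom(packet: Packet) -> str:
    # A closed domain could define its own protocol and its own processing.
    return f"custom handling of {len(packet.payload)} bytes"


pipeline = ProgrammablePipeline(default=lambda packet: "drop")
pipeline.install(4, forward_ip)        # illustrative ID for a standardized protocol
pipeline.install(0xFD, handle_custom)  # illustrative ID for a private protocol

print(pipeline.process(Packet(0xFD, b"abc")))
```

The point of the sketch is that supporting the private protocol takes only a software change inside the closed domain, without waiting for a new standard to be agreed and rolled out.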

Summary - The Internet of the future

We are at the beginning of a new era which will be characterized by digitalization and the constant interconnection of everything. The goal of the next generation of the Internet is, through abstraction and automation, to provide any desired bandwidth on demand between any and all participants or data centers. For this, consistent further development of the existing technologies is required, and additionally, new approaches to the integration of infrastructure, software, and services must be conceived. Efficient data processing is becoming increasingly important – in the future, it is conceivable that analysis will already take place in the network during transmission.

Christoph Dietzel has been Head of the Research & Development Department at DE-CIX since 2017. Previously, he was a part of the DE-CIX R&D team and responsible for several research initiatives, including numerous projects funded by the public sector (EU, German Federal Ministries). Chris is a PhD student in the INET group at the Technische Universität Berlin, supervised by Anja Feldmann, and has published at various renowned conferences and in journals including ACM SIGCOMM, ACM IMC, IEEE Communications Magazine, and IEEE Journal on Selected Areas in Communications.

His ongoing research interests focus on Internet measurements / security, routing, and traffic classification. Chris is also highly interested in IXP-related aspects of the Internet ecosystem.

Please note: The opinions expressed in Industry Insights published by dotmagazine are the author’s own and do not reflect the view of the publisher, eco – Association of the Internet Industry.