Reducing the Bill for Powering the Digital Economy

For both the environment and company OPEX, power consumption cannot increase at the same speed as computing power. Judith Ellis explores what data center operators and company IT professionals can do to create efficiencies.

© 4X-image | iStockphoto

The more dependent we become on digital infrastructure for industry and society, the greater the drive to increase the processing capacities of the hardware supplying the computing power. The more powerful the computing capacity becomes, the more important it is to make it affordable through creating greater operating efficiency. The combination of increased capacity and greater efficiency drives demand – the more computing power our hardware has, the more software and data we can store and run on it; in turn, the more complex the software can become, providing us with even greater chances for optimization of everything from our supply chain management and maintenance to our lifestyle and shopping habits. The more use we make of these storage and computing capacities and bandwidth, the greater the need for even larger capacities becomes. And so the cycle continues.

Moore’s Law, in its popular form, predicts that the computing capacity of hardware devices will double every 18 to 24 months. Gordon Moore first made the underlying observation in 1965, about the number of components on an integrated circuit, and so far the trend has broadly held.

IT hardware manufacturers, software developers, data center suppliers, and infrastructure providers are all contributing to a constant increase in energy efficiency. Despite these gains, the growth in demand for computing power and data center services means that worldwide power consumption for IT is still increasing, though much more slowly than the computing capacity being delivered (for more information, see the article by Dr. Ralph Hintemann, from the research institute Borderstep).

Measuring data center efficiency – the PUE

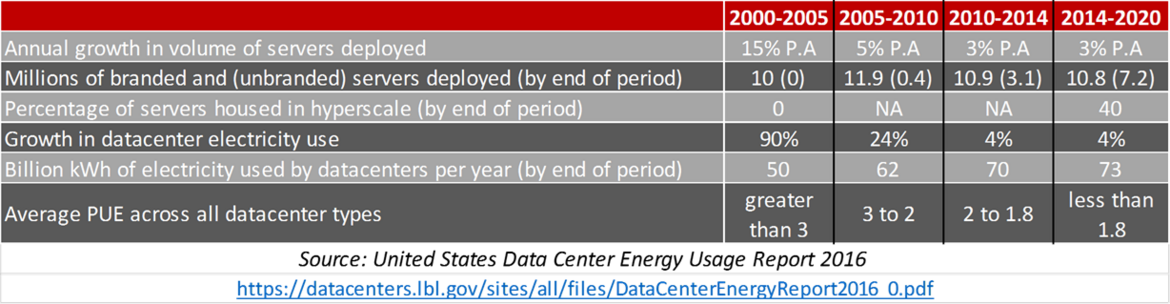

The foundation of all this potential for optimization is the data center industry – and this industry is more aware than most of the importance of reducing energy consumption. For all the efficiency gains in IT equipment since 2000, the optimization of power consumption in the area of data center facility management has been far greater, as is demonstrated by the continual decrease in PUE (Power Usage Effectiveness, a metric devised by the Green Grid consortium) over the last few years.

The PUE of a data center is the ratio of the total power the data center consumes to the power consumed by the IT equipment alone. The IT load is designated 1, and the remainder of the PUE value is the power consumption of the data center facilities, such as cooling and emergency power supply. A data center with a PUE of 2 therefore consumes equal amounts of power for the IT housed within it and for the facilities keeping that IT secure and cool. Google explains how they calculate the PUE of their data centers here: www.google.com/about/datacenters/efficiency/internal/. Over the last few years, the PUE of data centers has continually decreased, so that the facilities are responsible for only a fraction of the power consumption of the IT (a PUE of 1.5 means that the facilities use only half as much power as the IT housed within the data center, or in other words, 1/3 of the overall power consumption). And because the PUE is measured relative to the IT load, a falling PUE means that, while IT has become more and more efficient, the facilities have become more efficient to an even greater extent.
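The arithmetic behind these PUE figures can be sketched in a few lines of Python. This is a minimal illustration rather than any operator's actual measurement method: the function names and the kilowatt figures are assumptions chosen to reproduce the two examples in the text (a PUE of 2 and a PUE of 1.5).

```python
def pue(it_power_kw: float, facility_power_kw: float) -> float:
    """Return PUE: total data center power divided by IT power.

    facility_power_kw is the overhead (cooling, emergency power supply, etc.),
    not the total. Both parameter names are illustrative assumptions.
    """
    if it_power_kw <= 0:
        raise ValueError("IT power must be positive")
    if facility_power_kw < 0:
        raise ValueError("facility power cannot be negative")
    return (it_power_kw + facility_power_kw) / it_power_kw


def facility_share(pue_value: float) -> float:
    """Return the fraction of total power consumed by the facilities."""
    if pue_value < 1:
        raise ValueError("PUE cannot be below 1")
    return (pue_value - 1) / pue_value


# Hypothetical loads: 100 kW of IT with 100 kW and 50 kW of facility overhead.
print(pue(100, 100))        # facilities use as much power as the IT
print(pue(100, 50))         # facilities use half as much power as the IT
print(facility_share(1.5))  # share of overall consumption at a PUE of 1.5
```

With these assumed loads, the first call corresponds to the PUE of 2 described above, and the last call gives the one-third share of overall consumption that the facilities account for at a PUE of 1.5.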

Keeping cool? Cutting costs for cooling IT hardware

For a data center, one of the greatest challenges is how best to cool the sensitive IT equipment. Approaches to efficiency have taken two complementary paths over the last few years. The first is to find more energy-efficient cooling methods, for example using water for evaporative cooling (e3 Computing, or Host Europe in Strasbourg) or using outside air (“free cooling”, which is especially effective in cold climates, making geographical regions like Western Europe attractive data center locations), and to design and construct the data center IT space in such a way that the cooled areas are isolated from uncooled areas, creating cold and warm aisles between the server racks. For more on cooling, see the interview with eco's Roland Broch, "Innovative Approaches to Energy Efficiency in Data Centers", and the article "What a “Green” Data Center Does To Save Energy", by Alex Bik from BIT BV.

The other is for hardware manufacturers to increase the tolerance of IT equipment to warm air, which makes free cooling feasible in a greater range of climatic conditions. Current ASHRAE recommendations allow a temperature of up to 27 degrees Celsius in the cold aisles, and the hope is to raise this threshold to 30 degrees and higher in future. The higher the temperature can be taken, the more geographical regions will be able to make use of free cooling, extending the environmental advantage of greater efficiency to a larger range of climatic conditions.

Double benefit – DC optimization good for the bottom line and the environment

Further efficiencies can be achieved through the use of Data Center Infrastructure Management software, which can monitor and optimize power consumption in the facilities. Several data center certification programs and initiatives support and encourage data center energy efficiency, including the EU Code of Conduct for Data Center Energy Efficiency (listen to the interview with Bruno Fery, from ebrc, as he explains the challenges and benefits of the Code of Conduct) and the eco Datacenter Star Audit Green Star for Energy Efficiency (see the interview with eco's Roland Broch, "Innovative Approaches to Energy Efficiency in Data Centers").

The result of all this is that the cloud, which is basically infrastructure housed in a professional data center, is able to capitalize on the combined energy efficiency of hardware, software and data center facilities. This means that the cloud can offer greatly reduced power consumption in comparison to company-internal data centers, which are generally not so stringently optimized. According to Dr. Béla Waldhauser, company-internal data centers also tend to be very conservative in their approach to acceptable temperatures in their cold aisles, preferring to cool to around 22 degrees and generally requiring refrigeration technology to achieve this.

Tips for companies on reducing operating costs and carbon footprint

Whether choosing to keep your IT and data center in-house or locating it at a commercial data center, consider the following recommendations:

- Invest in new IT infrastructure, with much greater energy efficiency

- Invest in software to monitor power consumption and to manage facilities

- Migrate whichever storage and processing tasks possible to the cloud

- Implement hot and cold aisle containment

- Increase the temperature of cold aisles to the recommended 27 degrees

- Use free cooling – outside air to cool the data center

- Ensure the load on servers is in line with the power and cooling densities of the data center (so that the data center is neither over-engineered – and therefore inefficient – nor under-engineered – and therefore requiring expensive upgrades)
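
The last recommendation, matching server load to the power density the data center was designed for, can be checked with simple arithmetic. The sketch below is only an illustration under stated assumptions: the function names, the per-server wattage, the design density and the 20 percent tolerance are all hypothetical values, not figures from this article or from any standard.

```python
def rack_load_kw(server_count: int, watts_per_server: float) -> float:
    """Return the power drawn by one rack in kW (hypothetical inputs)."""
    return server_count * watts_per_server / 1000.0


def density_fit(load_kw: float, design_kw: float, tolerance: float = 0.2) -> str:
    """Compare a rack's load with the design density of the data center.

    tolerance is an assumed margin: loads more than this fraction below the
    design density are flagged as leaving the facility over-engineered.
    """
    if design_kw <= 0:
        raise ValueError("design density must be positive")
    ratio = load_kw / design_kw
    if ratio > 1.0:
        return "under-engineered"   # load exceeds what the facility can power and cool
    if ratio < 1.0 - tolerance:
        return "over-engineered"    # facility capacity is largely unused
    return "in line"


# Hypothetical rack: 20 servers at 400 W each, in a hall designed for 5 kW per rack.
load = rack_load_kw(20, 400)
print(load, density_fit(load, design_kw=5.0))
```

In practice the acceptable margin depends on the facility and on planned growth, so the tolerance should be treated as a parameter to agree with the data center operator rather than as a fixed rule.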