Freedom and Control of Data in the Multi-Cloud

Wally MacDermid, VP Cloud Business Development at Scality, on how companies can manage their multiple cloud services, avoid lock-in, and maintain data protection compliance.

© Pattadis Walarput | istockphoto.com

What is the concept behind the term “multi-cloud”?

Before cloud existed, companies had many different products from many different vendors in their data centers. They had products from IBM, Oracle, Sybase, Microsoft, SAP – the list simply went on and on. This effectively meant that, 30 years ago before cloud, our data centers were multi-vendor. If we now fast forward, we find companies using Salesforce and LinkedIn and AWS and Azure and Google – and again, the list goes on and on. There are thousands of cloud services from numerous different vendors. So the IT landscape of enterprises today are inherently “multi-cloud”. It used to be multi-vendor in one’s own data center, but now it's multi-vendor in their own data center and, on top of that, multi-cloud.

So when we're dealing with the myriad of options for cloud storage services that companies might be accessing, what are the key differences between the different providers?

Multi-cloud is an extremely vague and overly-used word right now and it can really mean different things, depending upon whether you’re talking about a SaaS application, or virtual machine portability, or cost management across clouds. When Scality says multi-cloud, we mean multi-cloud data management. So we think about Amazon S3 and Azure Blob storage and Google cloud storage and then obviously, Scality RING.

So, just to give you a bit of background, Scality is a software company that has been in operation since 2010. We're a leader in the Gartner Magic Quadrant and the IDC marketscape reports for object and file storage. The product that we've been selling since 2010 is called RING, which is a highly scalable on-premises storage software platform. To date, we've had great success with this product. We have over 200 global, very large customers, who use RING for petabyte scale storage – that means, for exceptionally large implementations.

Our current big news is the release of a second product called Zenko. The development of Zenko was propelled by our RING customers; those we've been working with for the past eight plus years, who signaled that, while they would continue to store a majority of their data on-premises – for a variety of reasons, such as security, performance etc. – they nonetheless regard the public cloud as a very powerful option for a range of different reasons. For some customers, this comes down to the cheap storage they can get with archive in the cloud. Many customers also want to use the powerful compute services in the cloud for analytics or Artificial Intelligence. So they want to take some of their data that they store on-premises in RING, and push it up to the cloud to do something interesting, exciting, and valuable with.

To clarify the distinction between them: RING can be thought of as private cloud storage or storage for private clouds – in other words, RING involves data storage. Zenko, on the other hand, can be described as “storage-agnostic” data management. This means that, while Zenko can of course manage data on RING, it can also manage it on Azure, AWS, Google, Wasabi, or Digital Ocean.

And that’s where we come back to the multi-cloud: The key difference between different providers is that if you look at AWS S3, which is the leading cloud storage service, and then Microsoft Azure Blob storage, or Google cloud storage, they all have different APIs. It's analogous to English, French, and German all being different languages, meaning that if I travel to the UK, or to France or Germany – assuming not everybody in France and Germany speaks English – I need to understand and speak three languages. So, if I'm an application developer and my users or my application demands that I support both of, or even all three public clouds (S3, Azure, and Google), I need to write and understand three different languages. That’s expensive: it means that it takes me longer to get my application in the market, and it means it's more expensive to support in the long-term. And what Zenko essentially is, is a single API “on the way in”. So an application writes to Zenko, and for this purpose we selected the Amazon S3 API, because it's the most widely used in the industry and serves as a sort of defacto standard. And then Zenko translates that one API into multiple storage backends. To return again to the UK/ France/ Germany analogy: it's as if I'm travelling with a translator. So I can go anywhere I want, I speak one language, and then my translator translates it to the local region.

So what does that mean for companies? How does that solve for companies the challenges they are facing?

Companies don't want to have to pick a single provider, and again this was true thirty years ago, before cloud. People use products from Oracle and Sybase, from IBM and HP, or from Dell and Compaq – because they want a choice. They don't want to be locked into a single vendor – and the lock-in word is something we hear time and time again from customers. So the challenge is that large enterprises want the freedom to be able to pick Amazon for one project, Azure for a second project, Google for a third. But the challenge that creates is, if I do that, then my developers and my users have to speak three different languages and that can be complicated. So if you put Zenko in between your users and your applications and the cloud services they use, your applications and users then only have to speak one language. And Zenko takes care of all of the complexity and the differences between the backend services.

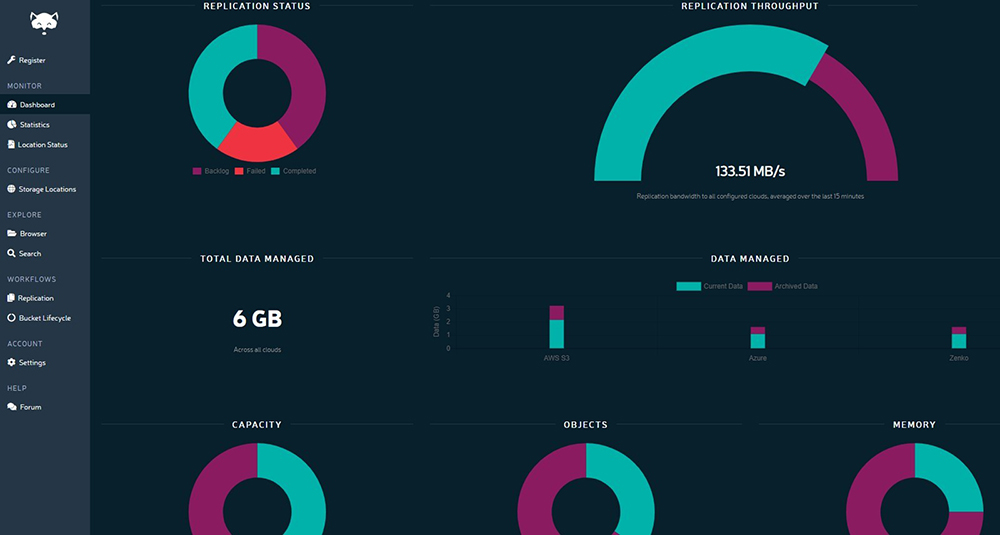

Zenko Dashboard © Scality

You actually deal with the vendor lock-in issue, but does it not create a new lock-in problem because we become dependent on the Zenko software?

No. There are two reasons why it does not lock somebody into Zenko. First, Zenko is available in both open source and an enterprise edition. Large enterprises love open source because, firstly, it doesn't lock them into a vendor. The code is out there so, should they choose to stop working with the vendor, and they want to use the code itself, they can. And the second reason is that when Zenko places data into a storage location – for example, when we put data into AWS or into Azure, or into Google – we don't put it there in a Zenko format. It's there in that native format of that storage location. So if Zenko goes away, you still have full and complete access to your data.

How does multi cloud and data protection law compliance work together?

Zenko is all about the freedom and the control to understand where your data is and to put it wherever you want it. And with data compliance and the GDPR specifically, you often need to place data within the region where the owners of that data reside. So if you have, for example, a Scality RING in Spain (where we have customers) and there is data on that RING that belongs to a German citizen, the GDPR may compel you to move that data into the German region. Zenko gives you that ability. From a technical perspective, Zenko has metadata about all of the data that it manages, and that metadata, for example, could know that a certain object or file belongs to a German citizen or is created by an application that is used by a German citizen. And that metadata allows Zenko to identify that a specific piece of data that has just been written has a tag that says “store in Germany”. And so, instead of pushing it to the Scality RING in Spain, it would move to a storage location – it might be RING, it might be Amazon, or it might be Azure, or it could be another storage service – that's in Germany. This data mobility is something that Zenko enables. And again, the mobility work goes back to my analogy of a tourist going to multiple countries: If you understand the local language and customs, it's much easier for you to be mobile across multiple countries.

As Vice-President of Cloud Business Development, Wally MacDermid helps lead the product and partnering strategy for Scality’s multi-cloud solutions. Wally has spent his career in customer and partner-facing roles for companies in the virtualization, networking, storage, and cloud markets. A veteran of both successful early-stage startups (Motive, Onaro, vKernel) as well as industry-leading technology providers (Remedy/BMC, NetApp, Riverbed), Wally has spent the past 10 years of his career partnering with leading cloud providers including Amazon Web Services, Microsoft Azure, and Google.

Wally lives in San Francisco, California with his wife and their two sons.

Please note: The opinions expressed in Industry Insights published by dotmagazine are the author’s own and do not reflect the view of the publisher, eco – Association of the Internet Industry.