Keeping a Cool Head in the Data Center

Mustafa Keskin of Corning Optical Communications on how data centers can reduce noise levels and save electricity with liquid cooling systems.

©Oselote | istockphoto.com

When I last visited a data center in 2023, I first had to sign multiple documents, including non-disclosure agreement (NDA) papers and health and safety protocols, which we were required to comply with at all times. Eventually, I received access cards to visit my customer's data hall and was greeted by… noise, lots of noise. In the data hall, there was a concert of fans trying to cool down servers, network switches, power distribution units, and air-handling devices.

A typical data center has a noise level of 70-80 dB, but this can sometimes reach up to 90 dB. To put this in perspective, a typical human conversation takes place at 60 dB, a vacuum cleaner can be as loud as 75 dB, and an alarm clock can hit 80 dB. Continued exposure to noise above 85 dB can cause permanent hearing loss.
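Because the decibel scale is logarithmic, the gap between these figures is larger than the numbers suggest: every 10 dB step corresponds to a tenfold increase in sound intensity. The short sketch below compares the levels quoted above; the function name is illustrative only, and the calculation concerns physical sound intensity, not perceived loudness.

```python
def intensity_ratio(louder_db: float, quieter_db: float) -> float:
    """Return how many times more sound intensity louder_db carries than quieter_db."""
    # A difference of 10 dB is a factor of 10 in intensity.
    return 10 ** ((louder_db - quieter_db) / 10)


# Levels quoted in the article
conversation_db = 60
typical_hall_db = 80
peak_hall_db = 90

print(intensity_ratio(typical_hall_db, conversation_db))  # 80 dB vs. 60 dB
print(intensity_ratio(peak_hall_db, conversation_db))     # 90 dB vs. 60 dB
```

In other words, a data hall at 80 dB carries about 100 times the sound intensity of a normal conversation, and one at 90 dB about 1,000 times.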

Data centers can get really hot because the electrical energy used by servers, storage devices, networking hardware, and various other equipment is converted into thermal energy. That’s why data centers require effective cooling systems and methods to manage temperature, ensuring the proper functioning and durability of their equipment. These systems transport heat energy from indoor IT spaces to the outdoor environment.

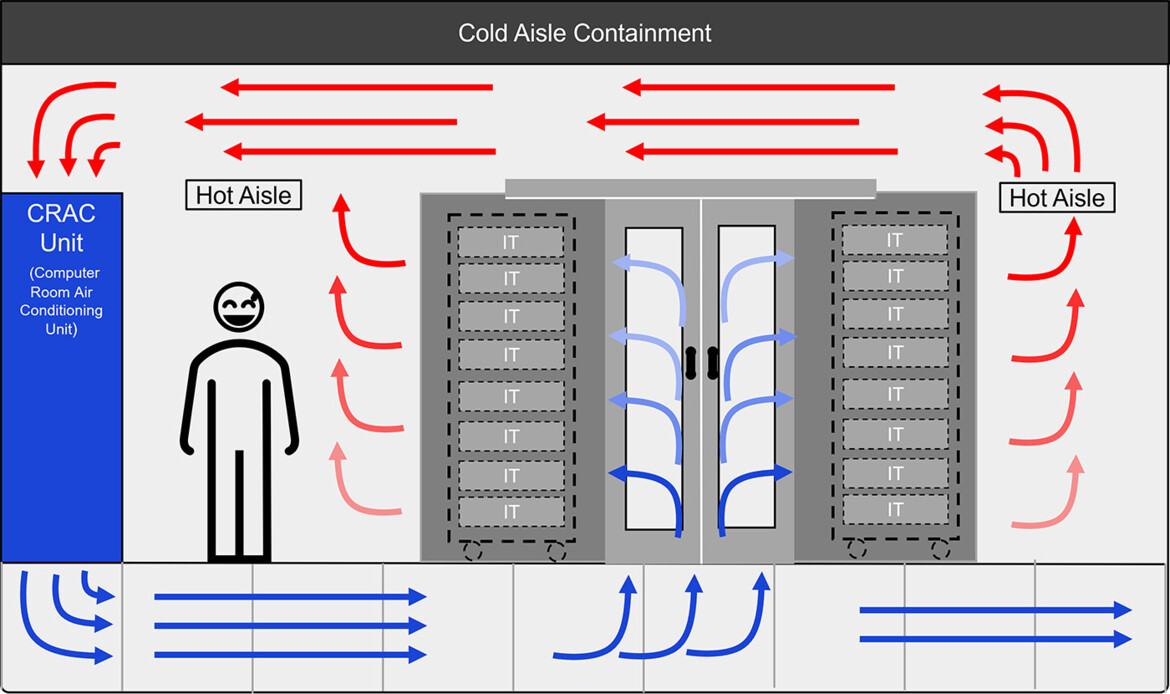

For years, air has been the primary medium for transferring heat. To disperse thermal energy, cool air must flow past every key component. This is achieved using large heatsinks and high-performance fans, and the fans are the main source of noise inside data centers. Cool air is first pumped through floor vents into the “cold aisle” of the data center. It then passes through the server racks, picking up heat and carrying it to the “hot aisle.” Finally, the heated air is drawn into ceiling vents and chilled by computer room air conditioning (CRAC) units, renewing the cycle.

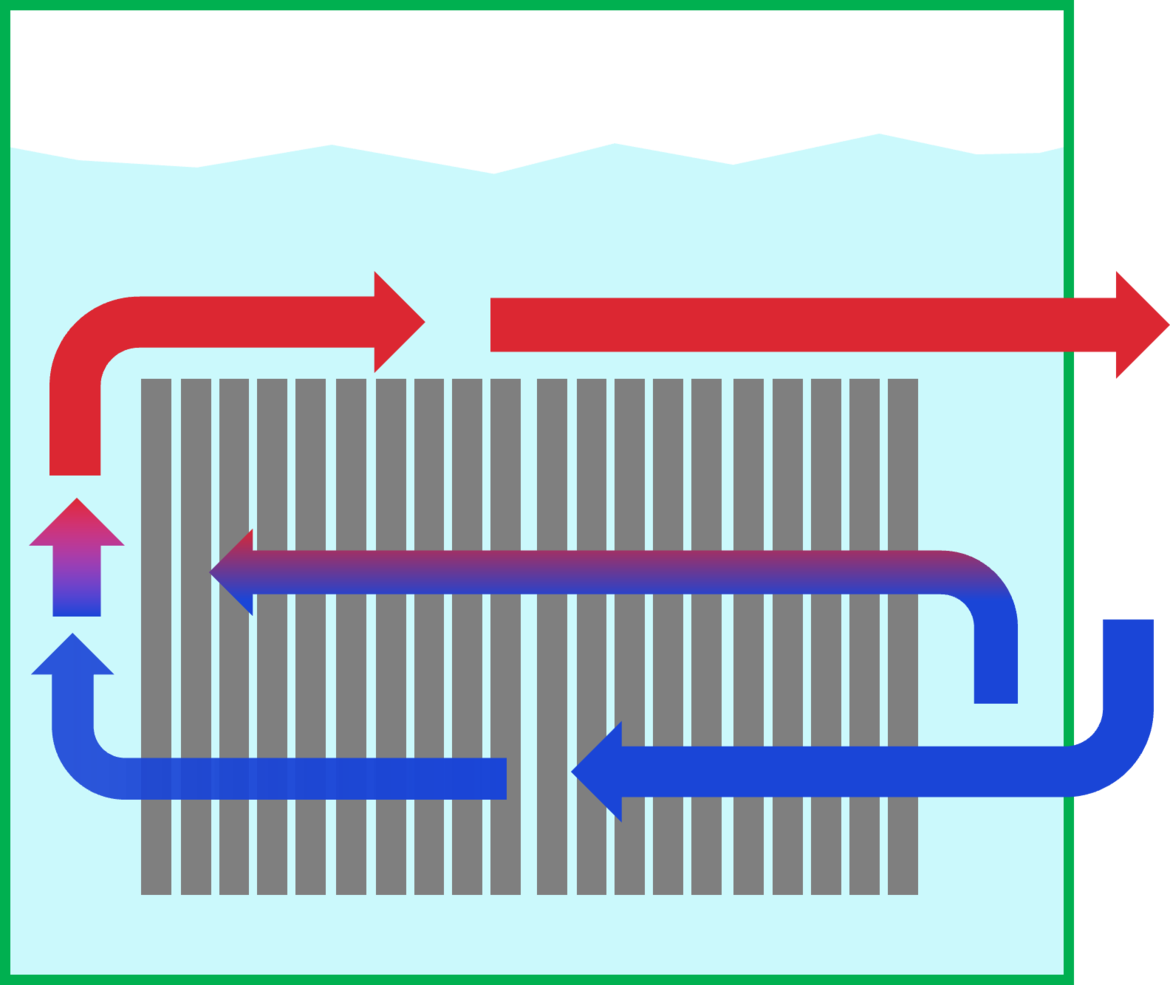

Figure 1: The schematic depiction of the thermal energy transfer cycle

Power requirements for server racks have recently risen sharply, from 5-7 kW per rack to as much as 50 kW and, in some instances, more than 100 kW per rack. The amount of heat generated by the IT equipment has increased accordingly.
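To get a feel for what these rack powers mean for air cooling, the following rough sketch estimates the airflow needed to carry the heat away, using the standard relation: airflow = power / (density × specific heat × temperature rise). The air properties and the 12 K temperature rise between cold and hot aisle are assumptions chosen for illustration, not figures from any particular data center.

```python
# Assumed textbook properties of air near 20 °C at sea level
AIR_DENSITY = 1.2   # kg/m^3
AIR_CP = 1005.0     # J/(kg*K)


def airflow_m3_per_s(rack_power_w: float, delta_t_k: float = 12.0) -> float:
    """Airflow needed to remove rack_power_w of heat.

    delta_t_k is the assumed air temperature rise across the rack.
    """
    return rack_power_w / (AIR_DENSITY * AIR_CP * delta_t_k)


for rack_kw in (5, 7, 50, 100):
    flow = airflow_m3_per_s(rack_kw * 1000)
    print(f"{rack_kw:>3} kW rack: {flow:.2f} m^3/s ({flow * 3600:.0f} m^3/h)")
```

For a fixed temperature rise, the required airflow grows in direct proportion to rack power, so a 50 kW rack needs ten times the airflow of a 5 kW rack.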

Traditional air-based cooling solutions cannot cope with this new reality for a simple reason: air has a low capacity for carrying heat. Is there a solution that can?

The industry warms to liquid cooling

Liquid-based heat transfer agents are fast emerging as a viable solution across the industry. For example, water can absorb well over 1,000 times more heat than the same volume of air, largely because it is far denser. However, we cannot simply circulate water inside our servers or IT equipment as we do air, because water conducts electricity. What we can do is circulate water inside insulated pipes and past the heat-generating central processing unit (CPU) and graphics processing unit (GPU) components using insulated heat-conducting plates. This method has been the most widely deployed solution recently. This kind of liquid cooling requires server racks equipped with vertical and horizontal liquid cooling manifolds, as well as servers and network switches that use liquid cooling plates instead of heat sinks. These are connected to the coolant distribution unit through a sealed piping network.
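The difference between the two media can be illustrated with a back-of-the-envelope calculation. The sketch below compares the heat that one cubic meter of water and one cubic meter of air absorb per kelvin of warming, then estimates the water flow needed for a 50 kW rack. All property values are assumed room-temperature textbook figures, and the 10 K coolant temperature rise is a hypothetical design value; the exact ratio depends on temperature and on which measure of cooling capacity is used.

```python
# Assumed room-temperature textbook properties (not from the article)
WATER_DENSITY = 998.0   # kg/m^3
WATER_CP = 4182.0       # J/(kg*K)
AIR_DENSITY = 1.2       # kg/m^3
AIR_CP = 1005.0         # J/(kg*K)


def volumetric_heat_capacity(density: float, cp: float) -> float:
    """Heat absorbed by 1 m^3 of fluid per kelvin of warming, in J/(m^3*K)."""
    return density * cp


def flow_for_heat_load(power_w: float, density: float, cp: float,
                       delta_t_k: float) -> float:
    """Volume flow in m^3/s needed to carry power_w at a rise of delta_t_k."""
    return power_w / (volumetric_heat_capacity(density, cp) * delta_t_k)


ratio = (volumetric_heat_capacity(WATER_DENSITY, WATER_CP)
         / volumetric_heat_capacity(AIR_DENSITY, AIR_CP))
print(f"Water holds about {ratio:.0f}x more heat per unit volume than air")

# Hypothetical case: 50 kW rack, coolant warming by 10 K
water_flow = flow_for_heat_load(50_000, WATER_DENSITY, WATER_CP, 10.0)
air_flow = flow_for_heat_load(50_000, AIR_DENSITY, AIR_CP, 10.0)
print(f"Water: {water_flow * 1000:.2f} L/s, air: {air_flow:.2f} m^3/s")
```

Under these assumptions, roughly a liter of water per second does the work of several cubic meters of air per second, which is why relatively thin pipes can replace large fans and air ducts.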

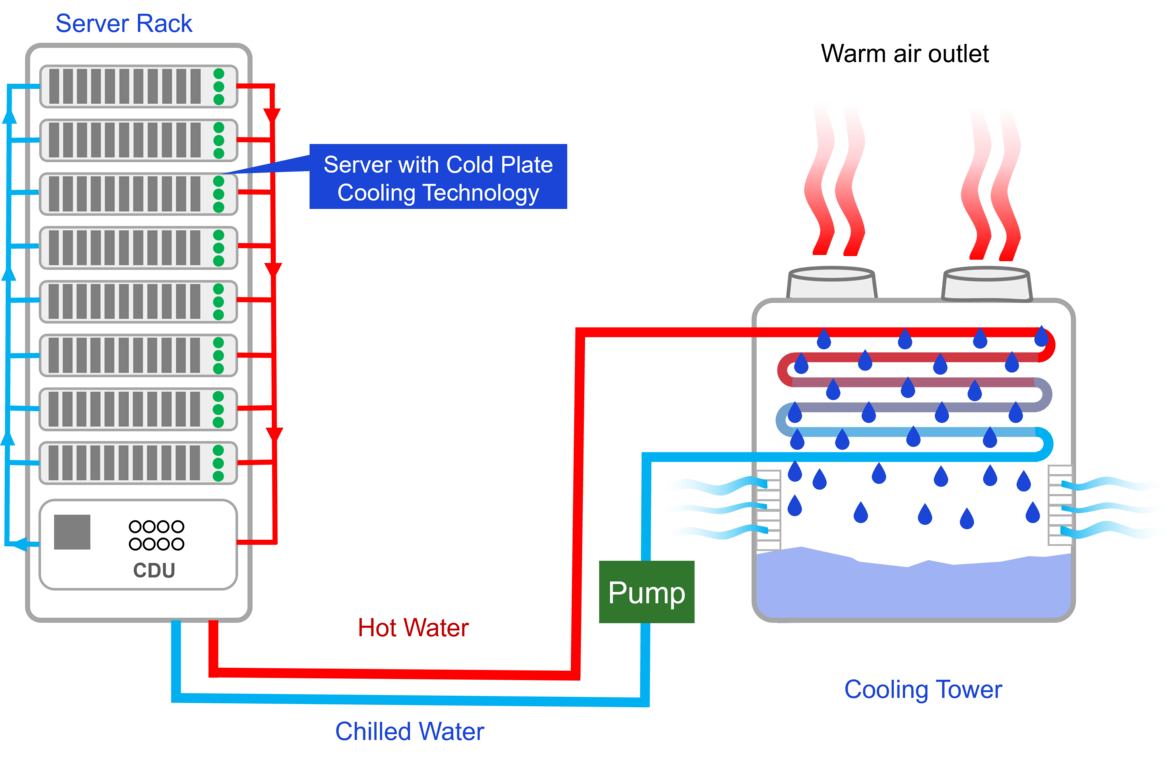

If we look inside a liquid-cooled server rack, we will see a lot of pipes and manifolds, which do not exist in air-cooled ones. You may be wondering what a manifold is. Essentially, it is a larger pipe that branches into several openings, which are then connected to the smaller pipes coming from the servers. A schematic depiction of this new cooling technology can be seen in Figure 2.

Figure 2: A liquid-cooled server rack with pipes and manifolds

We know that liquids are better than air in terms of heat transfer, so can we use this information to develop better cooling solutions? The industry has developed immersion cooling solutions in two flavors: single-phase immersion cooling (1PIC) and two-phase immersion cooling (2PIC).

In the 1PIC solution, servers are installed vertically in a coolant bath of a hydrocarbon-based dielectric fluid that is similar to mineral oil (Figure 3). The coolant transfers heat through direct contact with server components. The heated coolant then exits the top of the rack and is circulated through a coolant distribution unit connected to a warm-water loop, which in turn is connected to an outside cooling system. This type of cooling requires immersion-ready servers. Immersion cooling tanks also need a connection to an external pump and cooling tower, which circulate the returned hot water and transfer the heat outside the data center.

Figure 3: In the 1PIC solution, servers are installed vertically in a coolant bath of a hydrocarbon-based dielectric fluid that is similar to mineral oil

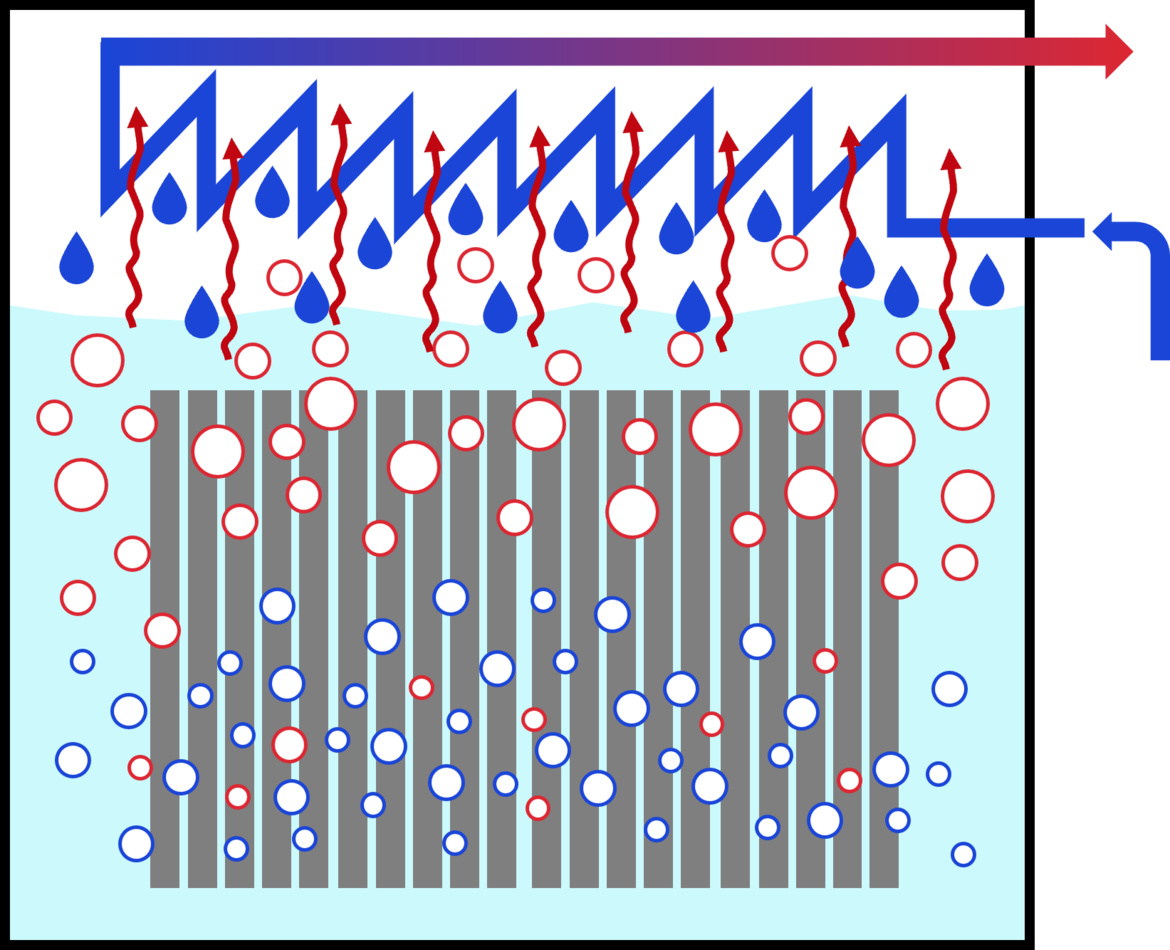

In 2PIC systems, servers are sealed inside a bath of specially engineered fluorocarbon-based liquid that has a low boiling point, often below 50°C (Figure 4). The heat from the servers boils the surrounding fluid. Boiling causes a phase change from liquid to gas, hence the name two-phase immersion cooling. The vapor condenses back into liquid when it reaches the cooled condenser coils, and the condensed liquid drips back into the bath to be recirculated through the system.

Figure 4: In 2PIC systems, servers are sealed inside a bath of specially engineered fluorocarbon-based liquid which has a low boiling point (often below 50°C)

To cut a long story short, if we look inside a liquid-cooled rack, we will see that the cooling pipes and manifolds leave very little space for structured cabling patch panels. In the case of immersion cooling solutions, it is better to keep optical connectivity components outside of the cooling tanks.

So, where can we mount our passive optical connectivity panels in our data centers if we want to use these new cooling solutions for our ever-heating server systems? At Corning, we have developed various 2U and 4U overhead mounting brackets suitable for wire trays and ladder racks to mount Corning EDGE and EDGE8 hardware where there is limited or no space within your server or network racks. One can mount the necessary optical patch panels in these overhead mounting trays and establish server connectivity by using short jumpers coming down to the server systems.

Regarding immersion cooling systems, it is also important to use cables that won’t degrade over time due to extended exposure to the liquids used inside these tanks.

They say liquid cooling can help reduce the electricity cost for an entire data center by up to 40%, or even up to 55% according to some estimates, and a reduction in the data center's server noise levels would be a welcome bonus. So far, I haven’t visited a data center facility that has implemented liquid cooling across the board, so I can’t confirm these numbers from personal experience. What’s clear is that as the data center heats up, the case for liquid cooling systems grows ever stronger.

With over 19 years of experience in the optical fiber industry, Mustafa Keskin is an accomplished professional currently serving as the Data Center Solution Innovation Manager at Corning Optical Communications in Berlin, Germany. He excels in determining architectural solutions for data center and carrier central office spaces, drawing from industry trends and customer insight research.

Please note: The opinions expressed in Industry Insights published by dotmagazine are the author’s or interview partner’s own and do not necessarily reflect the view of the publisher, eco – Association of the Internet Industry.